Nuclear fission, nanotechnologies, genetic engineering. They are all technologies that have been compared to a genie which mankind has let escape from a bottle to do either good or bad, depending on our ability to direct and control their power. Now, a new one can be added to that list: Artificial Intelligence, which has sparked a similar debate—how do you benefit from it without losing control over it?

The benefits of AI are clear and becoming more important, as Marko Erman, Chief Technology Officer of Thales, notes. “Artificial intelligence can save lives, whether we are talking about split-second decisions which must be made to assure the safety of people in transport of all kinds and in defence, or about protecting critical infrastructures. AI also has a very constructive role to play across all fields of activity, ranging from developing new medicines to exploring solutions to climate change.”

However, some experts warn that we are in a race against time before AI becomes so intelligent that we lose the power to control it.

Don’t Believe that AI is Superior to Human Intelligence

Don’t believe that, says Marko Erman, for two reasons: the superior capacity of Biological Intelligence to learn from many different types of incoming information, and the far more advanced way our nervous system works compared with relatively primitive AI models.

He says: “Think of a baby learning to recognize its mother. The baby will process many simultaneous sources of information—the mother’s face of course but also the smell, the gestures and prior memories. A machine that recognizes faces simply doesn’t have those capabilities and it’s not at all likely that humans can even try to teach machines what is naturally human, instinctual, intuitive, and sentimental, not to even speak of true consciousness, conscience and free will.”

Marko Erman also points to AI’s inherent inability to transfer what it has learned from one context to another. This weakness can be exploited to trick it with distorted data, so AI applications must be designed to guard against it.

“Take the case of a machine that has been trained to recognize lions in the jungle. If you put a truck in the picture of the jungle, the machine will report that it has spotted a lion.”

Marko Erman continues: “My neurosurgeon friends laugh when I ask them if Biological Intelligence is threatened by Artificial Intelligence. They point to our body’s nerve synapses, whose complex functioning is simply incomparable to the primitive models of AI which humans have developed.”

He also points to the huge difference, by a factor of millions, between the energy consumption of AI applications and that of the human synapse.

Thales Leadership in Making AI Accountable

There are two types of AI, and Thales is alone in its ability to combine both to develop new solutions, drawing on its long experience in each, says Marko Erman.

“That experience includes the continuous collection and analysis of vast amounts of data from sensors and radars so that our customers have all the information they need in an understandable format to make the right decisions at the right time.”

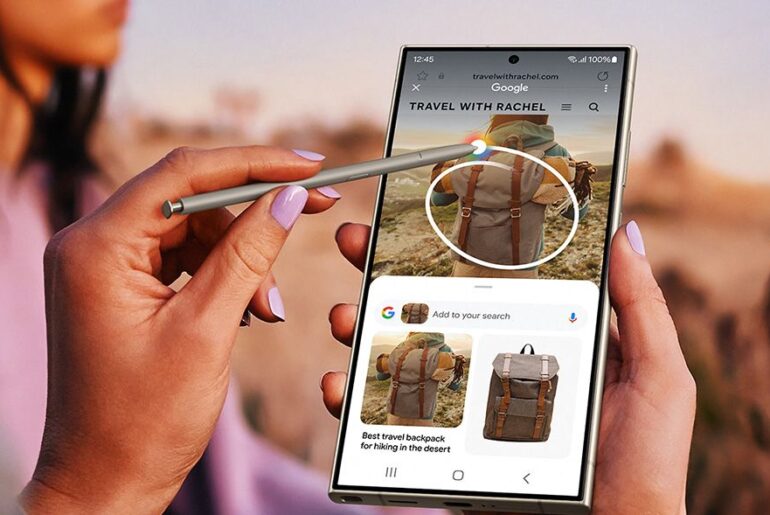

The first category of AI is symbolic, also described as model-based or knowledge-based. It relies on algorithms that Thales has created for applications such as preventive maintenance of railways, where AI processes information from sensors that can signal that a piece of equipment needs to be replaced.

The second category is empirical, an approach in which Thales also has more than thirty years of experience. It is based on loading big data, from smart sensors to cite one source, for analysis and learning, for uses such as facial recognition, autonomous cars, and defence.

So how do you reap the important benefits which AI can bring to a world with so many challenges, while making sure that it can be trusted to provide accurate analysis on which to base critical decisions?

The word is ‘trust’, says Marko Erman.

“The more we become dependent on AI’s great capabilities, the more we will need to understand what the AI application is deciding—and why. That is why we at Thales are taking a leadership position in creating a dialogue with AI. In a word, we want AI to explain itself as it makes recommendations based on its analysis of data. That dialogue for continuous accountability is one of the ways in which we will make sure that AI continues to be dependent on us so that we can continue to benefit from its vast capabilities.”